Chapter 1 + Section 2.1 Introduction

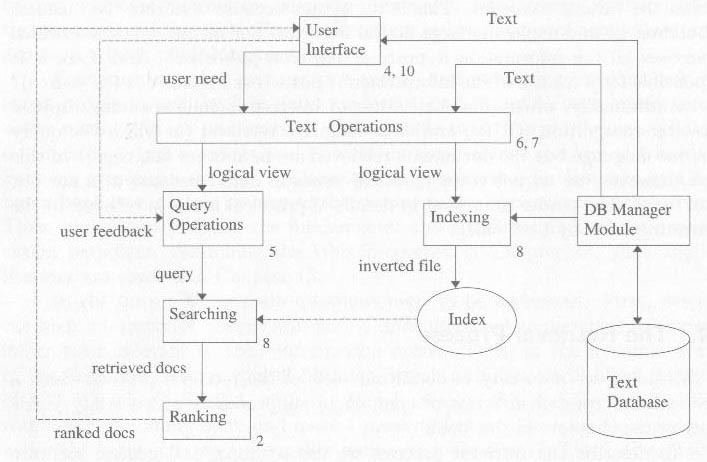

Information Retrieval Process

- Three Models of Browsing

- Flag

- Structure guided

- Hypertext

Section 2.2 A taxonomy of Information Retrieval Models

Predicting which documents are relevant is usaually dependent on a ranking algorithm.

- The three classic models in information retreival are:

Boolean Model: In the boolean model documents and queries are represented as sets of index terms, thus we say this model is a set theoretic model

Vector Model: In the vector model documents and queries are represented as vectors in a t-dimensional space, thus we say that the model is algebraic.

Probabilistic Model: The framework for modeling document and query representations is based on probability theory, and thus we sat that the model is prababilistic.

Section 2.3 Retrieval: Ad hoc and Filtering

The following is the formal definition for IR from MIR p 23.

Definition An information retrieval model is a quadruple

is a set composed of logical views (or representations) for the documents in the collection. is a set composed of logical views (or representations) for the user information needs. Such representations are called queries is a framework for modeling document representations, queries and their relationships. is a ranking function which associates a real number with a query and a document representation . Such ranking defines an ordering among the documents with regard to the query .

Section 2.5.1 Basic Concepts of Classic IR

Each document is described by a set of representative key workds calle index terms.

- Index terms are usually nouns. Why? Because verbs, adjectives connectives etc. have little meaning on their own.

- Index terms have weights described as follows:

Definition Let

Section 2.5.2 Boolean Model

- index terms are weighted either 0 or 1.

Queries are expressed in DisjunctiveNormalForm of the

can be written in disjunctive

normal form as

Definition For the Boolean model, the index term weight variables are all binary ie.e,

If

Section 2.5.3 Vector Model

- Weights are non-binary that is real numbers

- This allows us to calculate a degree of similarity.

Definition For the vector model, the weight $w_{i,j}$ associated with a pair

Thus we have a document

attachment:csce810_2_2.png

Figure 2

Having defined similarity the we now have a method for ranking and we can determine what is relevant by setting a threshold on the degree of similarity. This is essentially a clustering algorithm that clusters documents similar to the query in one set and documents dissimilar to the query in the other set. We say the IR problem can be reduced to a clustering problem determining what documents belong to the relevant set A and which do not. There are two main issues to resolved in a clustering problem:

intra-cluster similarity --> term frequency (tf): One needs to determine what are the features which better describe the objects in set A.

intra-cluster dissimilarity --> inverse document frequency (idf): One needs to determine what are the features which better distinguish the objects in set A from the remaining objects in collection C (the set of all documents).

Definition

Let

where the maximum is computed over all terms which are mentioned in the text of the document

The best known term weighting schemes use weights which are given by

or by a variation of this formula. Such term-weighting strategies are called

One such term wights formula was given by Salton and Buckley as

Advantages

- Term-weighting scheme improves retrieval performance

- Partial matching allows retrieval of documents that approximate the query conditions

- The cosine ranking formula sorts the documents according th their degree of similarity

Disadvantages

- Does not account for term dependencies

- Answer sets are difficult to improve without query expansion or relevance feedback.

Section 2.5.4 Probabilistic Model

Main Idea: Given a user query there is a set of documents which contains exactly the relevant documents and no other.

- There exists an ideal answer set to a query.

Given a set of index terms we guess at the properties of the ideal set and retrieve that set. This becomes the guess or initial set of documents.

Assumption (Prababilistic Principle):

Given a user query

Given a query

which computes the odds of the document

Definition

For the probabilistic mode, the index term weight variables are all binary i.e.,

Using Bayes' rule,

For more info see P32 MIR. They assume that

If we leave off

Assuming independence of index terms,

where:

In the very beginning (i.e. immediately after the query specification), there are no retrieved documents. Thus, one has to make simplifying assumptions such as

- assume that

is constant for all index terms - assume that the distribution of index terms among the non-relevant documents can be approximated by the distribution of index terms among all the docuemnts in the collection.

From these two assumptions we get:

where

- We can approximate

by the distribution of index term among the documents retrieved so far - We can approximate

by considering all the non-retrieved documents as not relevant.

From these two assumptions we get:

This process can be repeated without any human intervention to improve our guesses for the probabilities. For small values of

or